Awesome Golang.AI

Overview

For a quick overview, please refer to Overview.md.

The repository is a curated list of resources focused on artificial intelligence (AI) and machine learning (ML) development using the Go programming language. It follows the popular “awesome list” format, which aggregates high-quality, community-vetted tools, libraries, frameworks, benchmarks, and educational materials into a single, organized reference.

This repository does not contain executable code or software projects. Instead, it serves as a discovery and reference tool for developers, researchers, and engineers interested in leveraging Go for AI-related tasks. The list emphasizes Go’s strengths in performance, concurrency, and system-level programming, making it particularly valuable for building scalable, production-grade AI applications.

The repository is structured into well-defined categories such as benchmarks, large language model (LLM) tools, Retrieval-Augmented Generation (RAG) components, general machine learning libraries, neural networks, and educational resources. Each entry includes a link to the resource and a brief description of its functionality or purpose.

Scope of the Collection

The awesome-golang-ai list covers a broad spectrum of AI and ML domains, with a strong emphasis on practical tools and evaluation frameworks. Key categories include:

- Benchmarks: Comprehensive evaluation suites for assessing LLM capabilities across various domains such as code generation, mathematical reasoning, multilingual understanding, and real-world software engineering tasks.

- Model Context Protocol Implementations: Resources related to the Model Context Protocol (MCP), an emerging standard for connecting LLM applications to external tools.

- Large Language Models (LLMs): Resources related to LLMs, including SDKs for interacting with major AI platforms (OpenAI, Google, Anthropic), development tools like Ollama for local model execution, agent frameworks, and Go-based implementations of transformer models.

- RAG (Retrieval-Augmented Generation): Tools for building RAG pipelines, including document parsers for converting PDFs and office files to structured formats, embedding models, and vector databases like Milvus and Weaviate for efficient similarity search.

- General Machine Learning Libraries: Foundational ML libraries in Go that support tasks such as regression, classification, clustering, and data manipulation, including libraries like Gorgonia, Gonum, and Golearn.

- Neural Networks and Deep Learning: Specialized libraries for constructing and training neural networks, including implementations of feedforward networks, self-organizing maps, and recurrent architectures.

- Specialized Domains: Resources for linear algebra, probability distributions, evolutionary algorithms, graph processing, anomaly detection, and recommendation systems.

- Educational Materials: Books, tutorials, and datasets to support learning and experimentation in AI with Go.

The list also includes emerging standards like the Model Context Protocol (MCP), which enables integration between LLM applications and external tools, highlighting the project’s focus on practical, interoperable AI development.

Intended Audience

The primary audience for this repository includes:

- Go Developers exploring AI/ML integration into their applications

- Machine Learning Engineers seeking performant, concurrent backends for AI systems

- Researchers evaluating LLMs or building experimental pipelines in Go

- DevOps and MLOps Engineers interested in deploying AI models in production environments where Go’s efficiency and reliability are advantageous

- Students and Learners who want to study AI concepts through Go implementations

The list is designed to be accessible to users with varying levels of technical expertise. While some entries assume familiarity with AI concepts, the structure and categorization allow beginners to explore resources progressively, from foundational libraries to advanced frameworks.

Contribution Guidelines

The repository does not include a CONTRIBUTING.md file, and the README gives no detailed contribution instructions, but it follows standard practices for awesome lists. Users are encouraged to submit pull requests that add new, relevant resources or improve existing entries.

Ideal contributions include:

- High-quality, actively maintained projects

- Well-documented libraries and tools

- Benchmarks with published results or academic backing

- Educational resources with practical value

All submissions should be directly relevant to AI/ML development in Go and must provide clear descriptions and working links. In the absence of formal guidelines, contributors should follow the existing format and structure of the list when proposing additions.

How to Use This List

Users can leverage the awesome-golang-ai list in several ways:

- Discovery: Browse categories to find tools relevant to specific needs, such as vector databases for RAG or SDKs for calling LLM APIs.

- Evaluation: Use benchmark entries to compare model performance or assess capabilities in areas like code generation or mathematical reasoning.

- Learning: Access books and tutorials to build foundational knowledge in AI with Go.

- Development: Integrate recommended libraries and frameworks into projects to accelerate AI feature implementation.

- Research: Use standardized benchmarks and datasets to conduct reproducible experiments.

The hierarchical structure allows users to quickly navigate from broad categories to specific tools, making it easy to locate resources without prior knowledge of the ecosystem.

Value of Curation in the Go AI Ecosystem

Curation plays a critical role in the Go AI ecosystem because Go has relatively few AI-focused libraries compared with languages like Python. By aggregating scattered resources into a single, well-organized list, awesome-golang-ai lowers the barrier to entry for developers interested in using Go for AI.

It promotes best practices by highlighting mature, well-maintained projects and encourages community growth by showcasing innovative tools and research. The list also helps identify gaps in the ecosystem, guiding future development efforts.

Furthermore, as Go gains traction in backend AI services, microservices, and cloud-native applications, a centralized reference helps developers find the components they need to build robust, scalable AI systems without sacrificing performance or reliability.

Star History

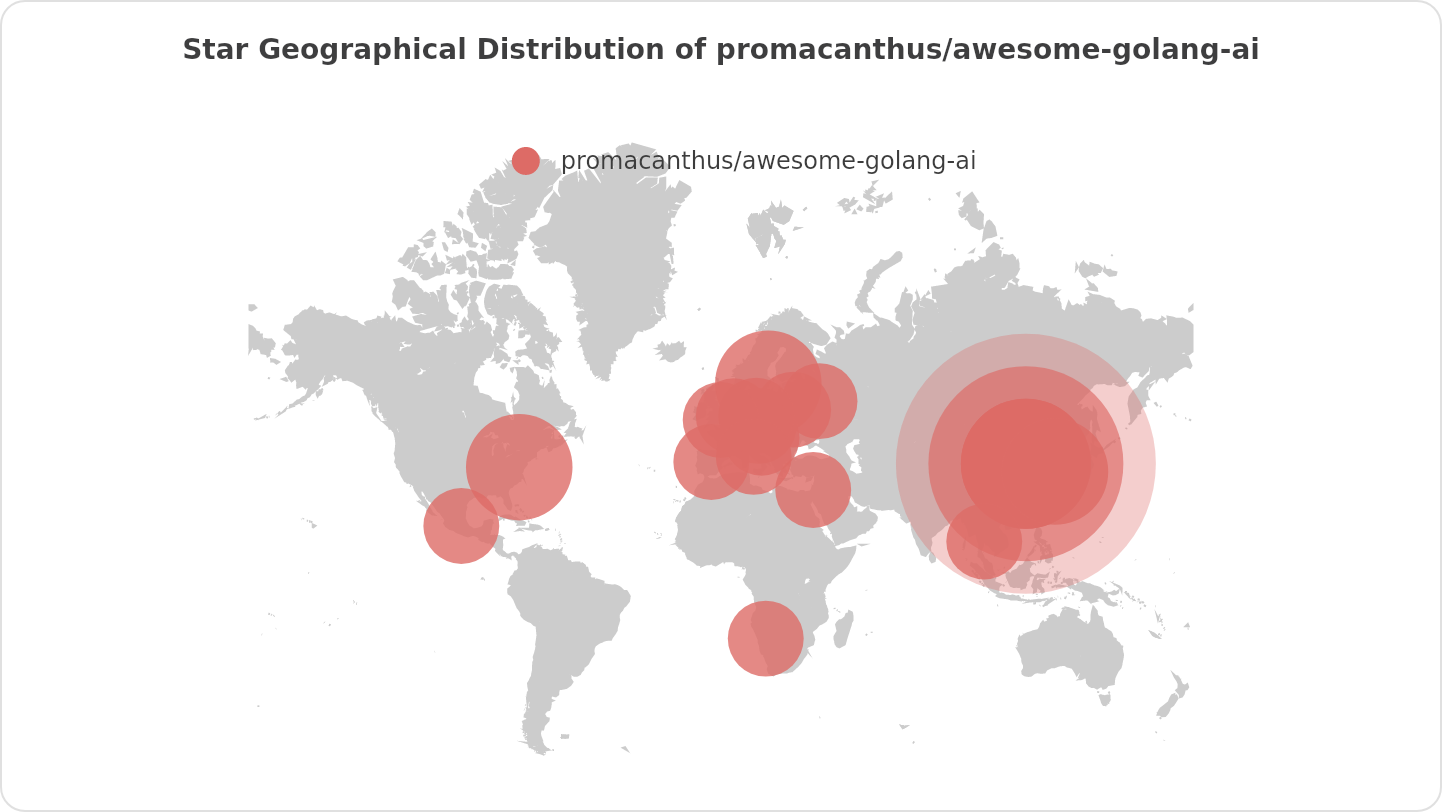

Star Geographical Distribution